The Opinionated LLM Framework for Production Code

BazingAI empowers you to control the context shared with LLMs, saving time, cost, and headaches.

Why BazingAI?

A better workflow for existing apps

It's one thing to use an AI assistant on a fresh, greenfield project. It's another to integrate it seamlessly into a seasoned, production repository. BazingAI is built specifically for the latter—where you need focused context and quality completions without risking your entire codebase or ballooning token costs.

- Not a Chat Replacement

BazingAI is a coding tool that complements your favorite LLM chat interface. Sometimes you need conversation; other times, you need to code. Switch between "Question" and "Code" without the overhead of a full chat environment.

- Ecosystem Ready

It works wonders with other specialized tools like v0. Build your UI there, then adapt and evolve it locally with BazingAI. Because you only feed the specific code you need, there's no extra context or overhead.

- Context on Demand

Most AI coding assistants dump your entire project context into every request. That's expensive, noisy, and risks oversharing. BazingAI lets you choose which files or snippets the LLM sees—leading to sharper responses and fewer wasted tokens.

BazingAI Features

Everything you need in a single plugin

Built from real-world experience with large codebases, BazingAI streamlines your workflow, cuts costs, and keeps your app’s core logic secure.

- Multi-File Write on Disk

We’re moving toward git diff-based commits. With small, functional prompts, you can quickly revert when something isn’t right.

- Better, Tailored Results

Choose the exact files that need attention, so you get relevant completions—no more dumping your entire repo as context.

- Token Optimization

Compared to vectorizing your entire codebase, we reduce context by up to 80%, saving on costs and trimming unnecessary chatter.

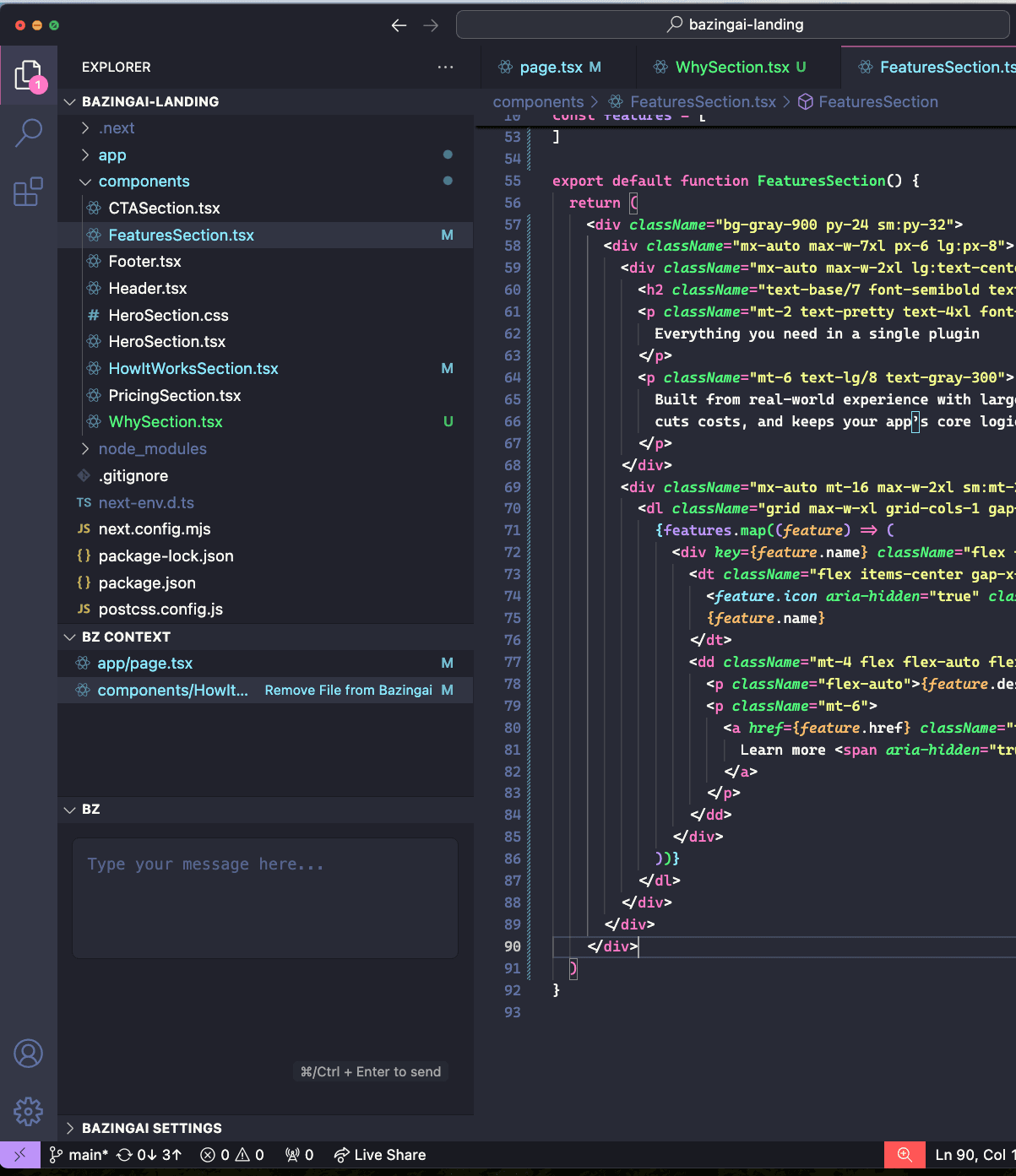

- Integrated within the main Explorer

No need for a separate sidebar or chat panel in VSCode. BazingAI lives right under your file tree.

- Privacy First

Embed only what you want. Keep sensitive logic out of scope and maintain control over what gets sent to the LLM.

- Multi-Model

Every few months there’s a new “king of the jungle.” Easily swap between different LLMs without changing your workflow.

How It Works

Simple, streamlined and dynamic.

- Add/Remove Your Files as Context

Pick the files you want the LLM to access. It's shortcut-friendly:

Add Current File: ⌘/Ctrl + Shift + A

Bulk add via fuzzy search: ⌘/Ctrl + P, then Add Files to BazingAI.

- Prompt & Generate

Describe your feature, refactor, bug fix, test creation... BazingAI feeds only the relevant context to your chosen LLM.

- Repeat

There is no preview, approve, or discard step: diffs apply in one go. No more massive copy-paste.
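To make the idea concrete, here is a minimal sketch of what "context on demand" could look like in code. It is not BazingAI's actual implementation or API: the `ContextFile` type, the `ContextSet` class, and the `buildPrompt` function are hypothetical names invented for illustration. The sketch only assumes that the plugin keeps a user-curated set of files and sends nothing else to the model.

```typescript
// Hypothetical sketch: none of these names come from BazingAI itself.

// A file the user has explicitly chosen to share with the LLM.
interface ContextFile {
  path: string;
  content: string;
}

// Holds the user-curated selection; files are keyed by path so that
// adding the same file twice just refreshes its content.
class ContextSet {
  private files = new Map<string, ContextFile>();

  add(file: ContextFile): void {
    this.files.set(file.path, file);
  }

  // Returns true if the file was in the selection.
  remove(path: string): boolean {
    return this.files.delete(path);
  }

  list(): ContextFile[] {
    return Array.from(this.files.values());
  }
}

// Builds the prompt from the selected files only, followed by the task.
// Anything not in the ContextSet never reaches the model.
function buildPrompt(instruction: string, context: ContextSet): string {
  const sections = context
    .list()
    .map((f) => `--- ${f.path} ---\n${f.content}`);
  return [...sections, `Task: ${instruction}`].join("\n\n");
}
```

In this sketch, adding `src/cart.ts` and `src/billing.ts` to a `ContextSet`, removing `src/billing.ts`, and then calling `buildPrompt("Add a unit test", ctx)` produces a prompt that contains the cart file and the task but no trace of the billing file.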

Ready to Build Smarter?

Stop sending your entire repo to a black box. Fine-tune your prompts and keep your code safe with BazingAI.

Get Free Beta Access